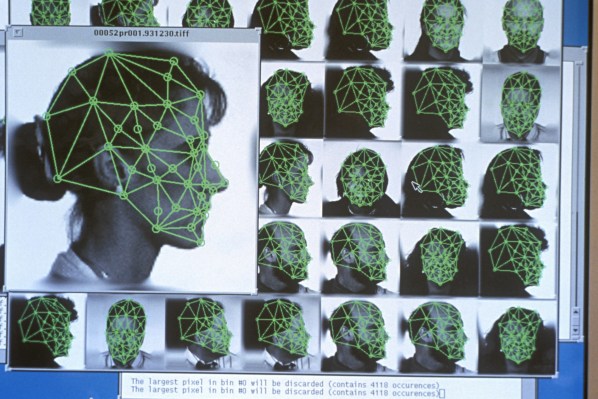

Facial recognition technology presents myriad opportunities as well as risks, but the government seems to consider only the former when deploying it for law enforcement and clerical purposes. Senator Kamala Harris (D-CA) has written to the Federal Bureau of Investigation, Federal Trade Commission, and Equal Employment Opportunity Commission, telling them they need to get with the program and face up to the very real biases and risks attending the controversial tech.

In three letters provided to TechCrunch (and embedded at the bottom of this post), Sen. Harris, along with several other notable legislators, pointed out recent research showing how facial recognition can produce or reinforce bias, or otherwise misfire. This must be considered and accommodated in the rules, guidance, and applications of federal agencies.

Other lawmakers and authorities have sent letters to various companies and CEOs or held hearings, but representatives for Sen. Harris explained that there is also a need to advance the issue within the government.

Attention paid to agencies like the FTC and EEOC that are “responsible for enforcing fairness” is “a signal to companies that the cop on the beat is paying attention, and an indirect signal that they need to be paying attention too. What we’re interested in is the fairness outcome rather than one particular company’s practices.”

If this research and the possibility of poorly controlled AI systems aren’t considered in the creation of rules and laws, or in the applications and deployments of the technology, serious harm could ensue. That means not just positive harm, such as the misidentification of a suspect in a crime, but also negative harm, such as calcifying biases in data and business practices in algorithmic form and depriving those affected by the biases of employment or services.

“While some have expressed hope that facial analysis can help reduce human biases, a growing body of evidence indicates that it may actually amplify those biases,” the letter to the EEOC reads.

Here Sen. Harris, joined by Senators Patty Murray (D-WA) and Elizabeth Warren (D-MA), expresses concern over the growing automation of the employment process. Recruitment is a complex process and AI-based tools are being brought in at every stage, so this is not a theoretical problem. As the letter reads:

“Suppose, for example, that an African American woman seeks a job at a company that uses facial analysis to assess how well a candidate’s mannerisms are similar to those of its top managers. First, the technology may interpret her mannerisms less accurately than a white male candidate. Second, if the company’s top managers are homogeneous, e.g., white and male, the very characteristics being sought may have nothing to do with job performance but are instead artifacts of belonging to this group. She may be as qualified for the job as a white male candidate, but facial analysis may not rate her as highly because her cues naturally differ. Third, if a particular history of biased promotions led to homogeneity in top managers, then the facial recognition analysis technology could encode and then hide this bias behind a scientific veneer of objectivity.”

If that sounds like a fantasy use of facial recognition, you probably haven’t been paying close enough attention. Besides, even if it’s still rare, it makes sense to consider these things before they become widespread problems, right? The idea is to identify issues inherent to the technology.

“We request that the EEOC develop guidelines for employers on the fair use of facial analysis technologies and how this technology may violate anti-discrimination law,” the Senators ask.

A set of questions also follows (as it does in each of the letters): have there been any complaints along these lines, or are there any obvious problems with the tech under current laws? If facial technology were to become mainstream, how should it be tested, and how would the EEOC validate that testing? Sen. Harris and the others request, by September 28, a timeline of how the Commission plans to look into this.

Next on the list is the FTC. This agency is tasked with identifying and punishing unfair and deceptive practices in commerce and advertising; Sen. Harris asserts that the purveyors of facial recognition technology may be considered in violation of FTC rules if they fail to test or account for serious biases in their systems.

“Developers rarely if ever test and then disclose biases in their technology,” the letter reads. “Without information about the biases in a technology or the legal and ethical risks attendant to using it, good faith users may be unintentionally and unfairly engaging in discrimination. Moreover, failure to disclose these biases to purchasers may be deceptive under the FTC Act.”

Another example is offered:

“Consider, for example, a situation in which an African American female in a retail store is misidentified as a shoplifter by a biased facial recognition technology and is falsely arrested based on this information. Such a false arrest can cause trauma and substantially injure her future housing, employment, credit, and other opportunities. Or, consider a scenario in which a young man with a dark complexion is unable to withdraw money from his own bank account because his bank’s ATM uses facial recognition technology that does not identify him as their customer.”

Again, this is very far from fantasy. On stage at Disrupt just a couple of weeks ago, Chris Atageka of UCOT and Timnit Gebru from Microsoft Research discussed several very real problems faced by people of color interacting with AI-powered devices and processes.

The FTC actually had a workshop on the topic back in 2012. But, amazing as it sounds, this workshop did not consider the potential biases on the basis of race, gender, age, or other metrics. The agency certainly deserves credit for addressing the issue early, but clearly the industry and topic have advanced and it is in the interest of the agency and the people it serves to catch up.

The letter ends with questions and a deadline rather like those for the EEOC: have there been any complaints? How will they assess and address potential biases? Will they issue “a set of best practices on the lawful, fair, and transparent use of facial analysis?” The letter is cosigned by Senators Richard Blumenthal (D-CT), Cory Booker (D-NJ), and Ron Wyden (D-OR).

Last is the FBI, over which Sen. Harris has something of an advantage: the Government Accountability Office issued a report on the very topic of facial recognition tech that had concrete recommendations for the Bureau to implement. What Harris wants to know is, what have they done about these, if anything?

“Although the GAO made its recommendations to the FBI over two years ago, there is no evidence that the agency has acted on those recommendations,” the letter reads.

The GAO had three major recommendations. Briefly summarized: do some serious testing of the Next Generation Identification-Interstate Photo System (NGI-IPS) to make sure it does what they think it does, follow that with annual testing to make sure it’s meeting needs and operating as intended, and audit external facial recognition programs for accuracy as well.

“We are also eager to ensure that the FBI responds to the latest research, particularly research that confirms that face recognition technology underperforms when analyzing the faces of women and African Americans,” the letter continues.

The list of questions here is largely in line with the GAO’s recommendations, merely asking the FBI to indicate whether and how it has complied with them. Has it tested NGI-IPS for accuracy in realistic conditions? Has it tested for performance across races, skin tones, genders, and ages? If not, why not, and when will it? And in the meantime, how can it justify usage of a system that hasn’t been adequately tested, and in fact performs poorest on the targets it is most frequently loosed upon?

The FBI letter, which has a deadline for response of October 1, is cosigned by Sen. Booker and Cedric Richmond, Chair of the Congressional Black Caucus.

These letters are just a part of what certainly ought to be a government-wide plan to inspect and understand new technology and how it is being integrated with existing systems and agencies. The federal government moves slowly, even at its best, and if it is to avoid or help mitigate real harm resulting from technologies that would otherwise go unregulated, it must start early and update often.

You can find the letters in full below.

EEOC: SenHarris – EEOC Facial Rec… on Scribd

FTC: SenHarris – FTC Facial Reco… on Scribd

FBI: SenHarris – FBI Facial Reco… on Scribd

source: https://techcrunch.com/2018/09/18/sen-harris-tells-federal-agencies-to-get-serious-about-facial-recognition-risks/

by: Devin Coldewey

Original Post: https://techcrunch.com/2018/09/18/sen-harris-tells-federal-agencies-to-get-serious-about-facial-recognition-risks/
